Brain Machine Interface (BMI)

Devices that enable their users to interact with computers by means of brain activity alone

As part of the Brain Machine Interfaces module at Imperial, we were tasked with developing a neural decoder capable of controlling a hypothetical prosthetic device. The dataset took the form of spike trains recorded with 98 electrodes inserted, under local anesthesia, into the brain of a macaque monkey.

The neural and positional data were sampled at 1000 Hz while the monkey performed planar hand movements.

In short, the algorithm had to estimate the trajectory of the monkey's hand as it reached for a target, based solely on brain activity.

Implementation

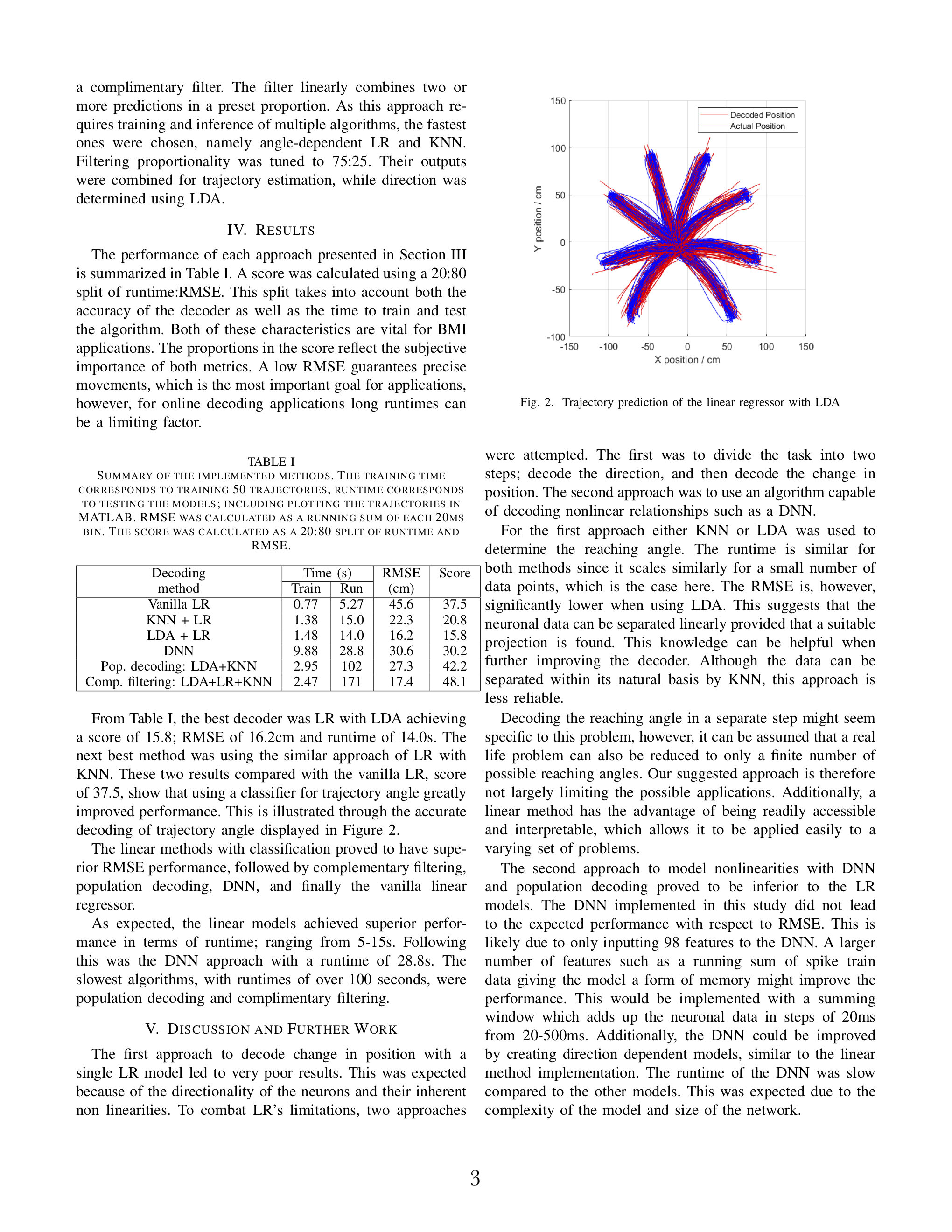

The fundamental task is to map neural activity onto real-world hand position. The data fed into the controller first needs to be pre-processed to extract the features of interest and put them into a suitable format. The decoder itself can be built in several ways. The approach we proposed first classifies the movement direction and then regresses the trajectory. The accompanying graphic shows how the pairing of an LDA classifier and a linear regressor works. Other combinations, including deep neural networks, are presented in the paper below.

The report with an outline of decoding methods and comparison results
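To make the classify-then-regress idea more concrete, here is a minimal sketch on synthetic data. It is not the decoder from the report. Only the 98 channels and the 1 ms sampling come from the text above; the four reach directions, the 20 ms bins, the cosine-tuned toy neurons, and every function name are assumptions made purely for illustration.

```python
import numpy as np

# 98 channels and 1 ms samples follow the text; everything else is assumed.
N_UNITS, T_MS, BIN_MS = 98, 300, 20
ANGLES = np.deg2rad([0, 90, 180, 270])  # hypothetical reach directions

def simulate_trial(rng, pref, angle):
    """Toy trial: cosine-tuned units firing during a straight reach."""
    speed = np.sin(np.pi * np.arange(T_MS) / T_MS) ** 2        # bell-shaped speed
    rate_hz = 20 + 15 * np.cos(angle - pref)[:, None] * speed  # (units, T_MS)
    spikes = (rng.random((N_UNITS, T_MS)) < rate_hz / 1000).astype(int)
    heading = np.array([np.cos(angle), np.sin(angle)])
    pos = 100 * np.cumsum(speed)[:, None] / speed.sum() * heading  # (T_MS, 2)
    return spikes, pos

def bin_counts(spikes):
    """Pre-processing: sum 1 ms samples into BIN_MS bins -> (n_bins, units)."""
    n_bins = spikes.shape[1] // BIN_MS
    trimmed = spikes[:, :n_bins * BIN_MS]
    return trimmed.reshape(len(spikes), n_bins, BIN_MS).sum(axis=2).T

def fit_lda(X, y):
    """LDA with a shared, lightly regularised covariance matrix."""
    classes = np.unique(y)
    idx = np.searchsorted(classes, y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    centered = X - means[idx]
    cov = centered.T @ centered / (len(X) - len(classes))
    cov += 1e-3 * np.eye(X.shape[1])           # small ridge for stability
    W = np.linalg.solve(cov, means.T)          # (features, classes)
    b = -0.5 * np.sum(means.T * W, axis=0) + np.log(np.bincount(idx) / len(y))
    return classes, W, b

def predict_lda(model, X):
    classes, W, b = model
    return classes[np.argmax(X @ W + b, axis=1)]

def fit_regressor(X, V):
    """Least-squares map from binned counts (plus bias) to per-bin displacement."""
    A = np.hstack([X, np.ones((len(X), 1))])
    coef, *_ = np.linalg.lstsq(A, V, rcond=None)
    return coef

def decode(lda, regressors, spikes):
    """Classify the direction, then integrate that direction's regressor output."""
    counts = bin_counts(spikes)
    direction = int(predict_lda(lda, counts.sum(axis=0, keepdims=True))[0])
    A = np.hstack([counts, np.ones((len(counts), 1))])
    return direction, np.cumsum(A @ regressors[direction], axis=0)

rng = np.random.default_rng(0)
pref = rng.uniform(0, 2 * np.pi, N_UNITS)      # preferred direction per unit
train = [(d, *simulate_trial(rng, pref, a))
         for d, a in enumerate(ANGLES) for _ in range(50)]

# Classifier features: total spike count per unit over the trial.
lda = fit_lda(np.array([s.sum(axis=1) for _, s, _ in train]),
              np.array([d for d, _, _ in train]))

# One linear regressor per direction, trained on per-bin hand displacement.
regressors = {}
for d in range(len(ANGLES)):
    X = np.vstack([bin_counts(s) for k, s, _ in train if k == d])
    V = np.vstack([np.diff(p[BIN_MS - 1::BIN_MS], axis=0, prepend=np.zeros((1, 2)))
                   for k, _, p in train if k == d])
    regressors[d] = fit_regressor(X, V)

test = [(d, *simulate_trial(rng, pref, a))
        for d, a in enumerate(ANGLES) for _ in range(10)]
accuracy = float(np.mean([decode(lda, regressors, s)[0] == d for d, s, _ in test]))
_, trajectory = decode(lda, regressors, test[0][1])
print(f"direction accuracy: {accuracy:.2f}, trajectory shape: {trajectory.shape}")
```

Splitting the problem this way lets each regressor stay simple, since it only has to model reaches in one direction. The trade-off is that a misclassified direction sends the whole trajectory the wrong way.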

“The applications for neural interfaces are as unimaginable today as the smartphone was a few decades ago.”

Chris Toumazou FREng FMedSci, FRS, co-chair

Even though this project focused on movement decoding, the potential applications and benefits of BMIs are much broader. They range from device control to direct brain-to-brain communication. I hope to work on BMIs again one day and push the boundaries of what we know an inch further.